18 Top [Big Data](http://cashrebo805.trexgame.net/top-10-scratching-tools-in-2023-for-effective-information-extraction) Tools and Technologies to Know About in 2023

Additionally, configuration changes can be made dynamically without affecting query performance or data availability. HPCC Systems is a big data processing platform developed by LexisNexis before being open sourced in 2011. True to its full name, High-Performance Computing Cluster Systems, the technology is, at its core, a cluster of computers built from commodity hardware to process, manage and deliver big data. Hive runs on top of Hadoop and is used to process structured data; more specifically, it's used for data summarization and analysis, as well as for querying large amounts of data.

Furthermore, there are many open source big data tools, some of which are also offered in commercial versions or as part of big data platforms and managed services. Below are 18 popular open source tools and technologies for managing and analyzing big data, listed in alphabetical order with a summary of their key features and capabilities. To keep up with these demands, a myriad of innovative technologies have been developed that provide the infrastructure to handle such massive amounts of data.

As we've mentioned previously, data is just a piece of raw information. However, it can become a great source of value when analyzed for relevant business needs. Predictive analysis is a combination of statistics, machine learning, and pattern recognition, and its main goal is the prediction of future probabilities and trends. Lastly, only 12.7% of respondents said their companies invested more than $500 million.
Before we give you some numbers on how users generate data on Twitter and Facebook, we wanted to paint a picture of general social media usage first. GlobalWebIndex published a piece on the average number of social accounts. In the future, global corporations should start developing products and services that capture data so they can monetize it effectively. Industry 4.0 will rely even more on big data and analytics, cloud infrastructure, artificial intelligence, machine learning, and the Internet of Things. Cloud computing is the most efficient way for companies to handle the ever-increasing volumes of data needed for big data analytics, because it enables modern enterprises to collect and process huge amounts of data. In 2019, the global big data analytics market revenue was around $15 billion.

- In addition, the new platform will help McKesson evolve from descriptive to predictive and prescriptive analytics.
- Internal data monetization methods include using available data sets to measure business performance in order to improve decision-making and the overall efficiency of global businesses.
- In 2021, it was forecast that the total amount of data created worldwide would reach 79 zettabytes.
- Support for running machine learning algorithms against stored data sets for anomaly detection.
- By 2013, a whopping 64% of the global financial sector had already incorporated big data as part of its infrastructure.
- Various analytics tools available on the market today help address big data challenges by providing ways to store this data, process it and draw valuable insights from it.

Big Data/AI Technologies Were Adopted By 48.5% Of Companies In The US In 2021

As businesses continue to see big data's immense value, 96% will look to employ specialists in the field. While going through various big data statistics, we found that back in 2009 Netflix invested $1 million in improving its recommendation algorithm. What's even more interesting is that the company's budget for technology and development stood at $651 million in 2015. According to the latest Digital report, internet users spent an average of 6 hours and 42 minutes online each day, which illustrates how rapidly big data is growing. So, if each of the 4.39 billion internet users spent 6 hours and 42 minutes online daily, we collectively spent about 1.2 billion years online over the course of a year.
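The 1.2 billion figure only works out if the daily usage is added up over a full year. Here is a minimal sketch of that arithmetic in Python, assuming a 365-day year and that every one of the 4.39 billion users spends exactly the average time online. The function name is illustrative and not taken from any report.

```python
def collective_years_online(users: float, hours_per_day: float, days: int = 365) -> float:
    """Total person-years spent online by `users` people over `days` days."""
    total_hours = users * hours_per_day * days
    hours_per_year = 24 * 365  # assumption: a 365-day year
    return total_hours / hours_per_year


USERS = 4.39e9               # internet users, as quoted in the text
HOURS_PER_DAY = 6 + 42 / 60  # 6 hours 42 minutes

years = collective_years_online(USERS, HOURS_PER_DAY)
print(f"{years / 1e9:.2f} billion years")
```

Under these assumptions the result lands at roughly 1.2 billion person-years for one year of usage. A single day of usage, by contrast, adds up to only a few million person-years.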


Challenges Associated With Big Data

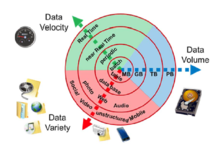

The basic requirements for working with big data are the same as the requirements for working with datasets of any size. However, the massive scale, the speed of ingesting and processing, and the characteristics of the data that must be dealt with at each stage of the process present significant new challenges when designing solutions. The goal of most big data systems is to surface insights and connections from large volumes of heterogeneous data that would not be possible using conventional methods.

With generative AI, knowledge management teams can automate knowledge capture and maintenance processes. In simpler terms, Kafka is a framework for storing, reading and analyzing streaming data.

Interestingly, what one organization considers big data may be ordinary data for another, so the term cannot be pinned down in a strict definition, but it can be explained loosely through examples. I am sure that by the end of the article you will be able to answer the question yourself.

TikTok dropshipping is a business model that uses the TikTok platform to generate sales by promoting products that are sold through an online dropshipping store. Dropshipping lets people sell products from third-party suppliers without having to hold or ship inventory themselves.

While better analysis is a positive, big data can also create overload and noise, reducing its usefulness. Companies must handle larger volumes of data and determine which data represents signal and which is noise.
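To make the description of Kafka as a framework for storing, reading and analyzing streaming data a little more concrete, here is a toy sketch of the underlying idea: an append-only log that consumers read by offset. This is not Kafka's API. The `TopicLog` class, its methods and the sample readings are all hypothetical, and a real deployment adds partitioning, replication and persistence.

```python
from dataclasses import dataclass, field


@dataclass
class TopicLog:
    """Hypothetical in-memory stand-in for a single stream of records."""
    records: list = field(default_factory=list)

    def append(self, value) -> int:
        """Store a record and return its offset (its position in the log)."""
        self.records.append(value)
        return len(self.records) - 1

    def read(self, offset: int, max_records: int = 10) -> list:
        """Return up to max_records starting at offset; reading removes nothing."""
        return self.records[offset:offset + max_records]


log = TopicLog()
for reading in [21.5, 22.0, 35.9, 21.8]:  # made-up sensor values
    log.append(reading)

# Two independent readers use their own offsets over the same stored data.
dashboard_batch = log.read(offset=0)
alerts = [r for r in log.read(offset=2) if r > 30]
```

Because reading never deletes records, several consumers can analyze the same stream at their own pace. That is the property the storing and reading parts of the description refer to.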
